Infibee Technologies offers India’s No.1 Hadoop Training in Hyderabad with global certification and complete placement guidance.

Kickstart your career with our Hadoop Course in Hyderabad, led by 10+ industry-experienced experts. Benefit from affordable fees, hands-on mock projects, resume preparation, interview coaching, and placement-focused training, along with lifetime access to recorded sessions of live classes. Learn the practical uses of Hadoop in big data processing, distributed storage, and analytics to advance your career in IT and data management.

Join our Hadoop Training in Hyderabad today and ignite your career with high-paying opportunities in top companies.


Start your professional career with Hadoop Training in Hyderabad from Infibee Technologies, which provides specialized knowledge of big data operations, distributed storage, and analytics. The training equips IT professionals and students to manage large-scale datasets, run ETL processes, and implement and maintain data pipelines efficiently.

The Hadoop Course in Hyderabad is particularly useful for IT professionals, data engineers, and fresh graduates who want to build a career in data management and analytics. Learners get hands-on training in Hadoop components such as HDFS, MapReduce, Hive, Pig, and Spark, so that they can contribute actively to real, enterprise-scale big data projects.

| Course Topics Covered | Applications of Hadoop Course | Tools Used |
|---|---|---|
| Hadoop Basics & Architecture | Big Data Analytics | Hadoop Distributed File System (HDFS) |
| HDFS, MapReduce, YARN | Data Warehousing | Hive |
| Pig, Sqoop & Flume | ETL Processing | Pig |
| Hive, HBase, and Spark | Real-time Data Processing | HBase |
| Spark & Spark SQL | Machine Learning & Data Science | Spark |
| Data Ingestion & Workflow Automation | Cloud Data Solutions | Sqoop, Flume |

India’s No.1 Hadoop Training in Hyderabad with placement assistance.

Hands-on training with real-time projects and case studies.

Guidance from 10+ industry experts with real-world experience.

Affordable fees with EMI options.

Resume preparation, mock interviews, and placement support.

Lifetime access to recorded sessions.

Flexible training modes: Classroom, Online, Corporate.

Located at the heart of Hyderabad, Infibee Technologies is the premier institute for Hadoop training in Hyderabad. Infibee provides practically oriented training in which students learn big data management and analytics skills.

Our Hadoop Course in Hyderabad covers HDFS, MapReduce, Pig, Hive, HBase, Spark, Sqoop, and Flume. Students work on real-time projects to build hands-on skills, analyzing large datasets for different business entities in realistic scenarios.

Infibee believes in career preparation, so students receive help with resume writing, mock interviews, and placement. Our students are trained to design, build, and deploy big data solutions for IT organizations, startups, and analytics teams. This approach prepares each student to work as a Hadoop professional on enterprise-scale data projects and to contribute to analytics, data engineering, and business intelligence.

After successfully completing the course, learners are awarded a recognized Hadoop Certification in Hyderabad. The certificate attests to the candidate’s knowledge of big data processing, Hadoop ecosystem tools, and analytics. It makes the candidate more employable, strengthens their resume, and opens the door to well-paying jobs in IT, data engineering, and analytics.

Our alumni have been hired in top MNCs: TCS, Infosys, Wipro, Tech Mahindra, Cognizant

Classroom Training

Online Training

Corporate Training

| S.No. | Certification | Cost (INR) | Validity / Expiry |
|---|---|---|---|
| 1 | Cloudera CCA | ₹25,000 | 3 Years |
| 2 | Hortonworks HDP Cert | ₹22,000 | 3 Years |
| 3 | Hortonworks Data Engineer | ₹30,000 | 3 Years |
| 4 | MapR Hadoop Admin | ₹28,000 | 3 Years |
| 5 | Big Data Hadoop Expert | ₹35,000 | 3 Years |

Master big data processing and distributed computing.

Hands-on training with live projects.

Industry-recognized certification for career growth.

Placement support with top IT companies.

Affordable fees with EMI options.

Lifetime access to recorded sessions.

Learn from 10+ experienced instructors.

Enhance employability in big data, analytics, and IT roles.

Hadoop fundamentals and architecture.

HDFS, MapReduce, YARN, Pig, and Hive.

HBase, Spark, Sqoop, and Flume integration.

ETL processing and data workflows.

Big data analytics and machine learning basics.

Real-time data processing and cloud integration.

Fresh graduates.

IT professionals and software developers.

Data analysts and aspiring big data engineers.

Anyone aiming for a career in Hadoop in Hyderabad.

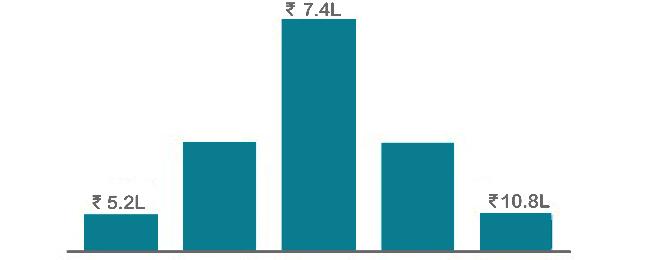

| Level | Role | Salary (₹ lakh per annum) |
|---|---|---|
| Freshers / Junior (0–3 years) | Hadoop Developer Trainee | 3–4.5 |
| | Junior Big Data Analyst | 4–5.5 |
| | Hadoop Support Executive | 4–5 |
| Mid-Level (4–8 years) | Hadoop Data Engineer | 5–8 |
| | Senior Big Data Analyst | 8–12 |
| | Hadoop Automation Specialist | 8–12 |
| | Hadoop Lead | 8–12 |
| Senior / Experienced (9+ years) | Principal Hadoop Engineer | 12–18 |
| | Head of Big Data | 15–20 |
| | Hadoop Consultant | 18–25 |
| Specialized Roles | Hadoop Security Expert | 10–15 |
| | Hadoop Testing Specialist | 10–15 |
| | Hadoop Expert | 12–18 |


Hadoop Training is also offered in other cities, including Hadoop Training in Chennai, Hadoop Training in Bangalore, Hadoop Training in Pune, and Hadoop Training in Delhi. Infibee Technologies provides hands-on training, experienced mentors, and placement support, which is exactly what candidates in Hyderabad look for, and that is what makes us the number one choice.

Step 1: Register for a Free Demo

Submit an inquiry form on our website.

Attend a free demo to understand the training methodology.

Step 2: Select Your Training Mode

Choose Classroom, Online, or Corporate training.

Confirm a batch timing that suits your schedule.

Step 3: Start Your Hadoop Journey

Learn from expert instructors.

Work on real projects and prepare for Hadoop Certification in Hyderabad.

Enhance your career through Hadoop Training in Hyderabad. Gain hands-on big data skills, certification, and placement support that help you land well-paying jobs. Enrol today and begin your Hadoop career!

Upgrade Your Skills & Empower Yourself

Begin your journey into the world of big data with our Hadoop Course in Hyderabad! Covering essential topics such as the Hadoop Distributed File System (HDFS), the MapReduce programming paradigm, and Hadoop ecosystem components like HBase, Hive, Pig, and Spark, this course is designed to equip you with the foundational knowledge and practical skills needed to excel in big data analytics.

Enroll in our Hadoop Training in Hyderabad, designed to offer top-tier instruction with a robust grounding in fundamental principles coupled with a hands-on approach. By immersing yourself in contemporary industry applications and scenarios, you will refine your abilities and acquire the proficiency to undertake real-world projects employing industry best practices.

By developing this Hadoop project, you will gain an understanding of the fundamentals of Hadoop architecture. Consider a scenario in which a web server writes a log file where each entry contains a timestamp and a search query: you will learn how to retrieve the top 15 queries from the previous 12 hours.
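The project logic maps naturally onto the MapReduce pattern: a mapper emits `(query, 1)` for each log entry inside the 12-hour window, a reducer sums the counts per query, and a final step keeps the 15 largest. The sketch below runs that pattern on a single machine in plain Python rather than on a Hadoop cluster. The tab-separated log format, the case-insensitive matching of queries, the function names `map_log_line` and `top_queries`, and the reading of "top" as "most frequent" are all assumptions made for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta


def map_log_line(line, cutoff, now):
    """Mapper: yield (query, 1) if the entry's timestamp falls in (cutoff, now]."""
    # Assumed log format: "<ISO-8601 timestamp>\t<query text>"
    stamp, sep, query = line.rstrip("\n").partition("\t")
    if not sep:
        return  # skip malformed lines that have no tab separator
    ts = datetime.fromisoformat(stamp)
    if cutoff < ts <= now:
        # Normalise case so "Hadoop Tutorial" and "hadoop tutorial" count together.
        yield query.strip().lower(), 1


def top_queries(log_lines, now, k=15, window=timedelta(hours=12)):
    """Return the k most frequent queries logged within `window` before `now`."""
    cutoff = now - window
    counts = Counter()
    # Shuffle and reduce collapsed into one step: sum the 1s per query key.
    for line in log_lines:
        for query, one in map_log_line(line, cutoff, now):
            counts[query] += one
    return counts.most_common(k)


if __name__ == "__main__":
    logs = [
        "2024-05-01T08:00:00\thadoop tutorial",
        "2024-05-01T09:30:00\tspark sql",
        "2024-05-01T10:15:00\tHadoop Tutorial",
        "2024-04-30T18:00:00\thive joins",  # more than 12 hours before `now`
    ]
    print(top_queries(logs, now=datetime(2024, 5, 1, 12, 0)))
    # prints [('hadoop tutorial', 2), ('spark sql', 1)]
```

On a real cluster, the same mapper and reducer logic would typically be written with the Hadoop MapReduce Java API or run through Hadoop Streaming, followed by a final top-k pass over the reducer output.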

As a detailed study of its architecture shows, the Hadoop Distributed File System (HDFS) was designed primarily to store and stream large files. Reading many small files is therefore inefficient in HDFS, because it requires a large number of seeks and many hops from one DataNode to another to retrieve each file.
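A common remedy in Hadoop is to pack many small files into fewer large ones, for example with Hadoop Archives (HAR files) or SequenceFiles keyed by file name. The sketch below illustrates only the underlying idea in plain Python: concatenate the small files into one blob and keep an index of byte offsets, so one large sequential read can replace many small ones. The function names and the in-memory dictionary input are hypothetical, and this is not the HAR or SequenceFile format.

```python
import io


def pack_small_files(files):
    """Concatenate many small files into one blob plus an (offset, length) index.

    `files` maps a file name to its bytes; returns (blob, index).
    """
    buf = io.BytesIO()
    index = {}
    for name, data in files.items():
        # Record where this file starts inside the packed blob and how long it is.
        index[name] = (buf.tell(), len(data))
        buf.write(data)
    return buf.getvalue(), index


def read_packed(blob, index, name):
    """Return the original bytes of `name` by slicing the packed blob."""
    offset, length = index[name]
    return blob[offset:offset + length]


if __name__ == "__main__":
    small = {"a.log": b"alpha", "b.log": b"beta", "c.log": b"gamma"}
    blob, index = pack_small_files(small)
    print(index)                              # {'a.log': (0, 5), 'b.log': (5, 4), 'c.log': (9, 5)}
    print(read_packed(blob, index, "b.log"))  # b'beta'
```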

This project is based on a well-known Kaggle competition for evaluating music recommendation systems. It uses the Million Song Dataset, released by Columbia University's Laboratory for the Recognition and Organization of Speech and Audio (LabROSA). The dataset contains audio features and metadata for one million popular contemporary songs.
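A simple baseline for this kind of project is item-to-item co-occurrence: songs that often appear together in users' listening histories are recommended to users who have played one but not the other. The sketch below is a swapped-in illustration of that baseline, not the competition's reference solution or the course's project code; the `histories` input (a user ID mapped to a list of song IDs) and the function names are hypothetical. At Million Song Dataset scale, the pair counting is the natural step to distribute with MapReduce or Spark.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_cooccurrence(histories):
    """Count how often each pair of songs appears in the same user's history."""
    co = defaultdict(Counter)
    for songs in histories.values():
        # sorted(set(...)) removes repeat plays and fixes the pair order.
        for a, b in combinations(sorted(set(songs)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(user, histories, co, k=3):
    """Rank unheard songs by summed co-occurrence with the user's played songs."""
    heard = set(histories.get(user, ()))
    scores = Counter()
    for song in heard:
        for other, count in co.get(song, Counter()).items():
            if other not in heard:
                scores[other] += count
    # Highest score first; ties broken alphabetically for deterministic output.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [song for song, _ in ranked[:k]]


if __name__ == "__main__":
    histories = {
        "u1": ["s1", "s2", "s3"],
        "u2": ["s1", "s2"],
        "u3": ["s2", "s4"],
        "u4": ["s1"],
    }
    co = build_cooccurrence(histories)
    print(recommend("u4", histories, co))  # ['s2', 's3']
```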

Educate your workforce with new skills to improve their performance and productivity.

The Hadoop Training in Hyderabad is designed to equip participants with comprehensive skills and practical expertise in the field of big data analytics. The objectives of this training include mastering core Hadoop concepts, applying acquired skills through hands-on projects, fostering critical thinking abilities, and preparing participants to tackle professional challenges effectively.

The objectives of the Hadoop training programme in Hyderabad are:

The course gives learners practical knowledge and the ability to handle real-world Hadoop issues. These skills improve their career prospects by giving them a competitive advantage in the job market and supporting career advancement in the big data field.

The emphasis on real-world projects in the Hadoop training programme is crucial for providing participants with practical experience in applying Hadoop concepts to authentic scenarios. Through hands-on projects, participants gain invaluable exposure to industry-relevant challenges and develop the skills needed to address them effectively. This approach ensures that participants are well-prepared to tackle real-world Hadoop implementations and excel in their professional careers.

The Hadoop training programme is designed to accommodate participants with varying levels of experience. While prior knowledge of programming languages such as Java or Python and familiarity with basic concepts of data management may be beneficial, it is not mandatory. The training programme is structured to cater to beginners as well as experienced professionals looking to enhance their skills in big data analytics using Hadoop.

Throughout the Hadoop training programme, participants will have access to a variety of learning resources and support mechanisms to facilitate their learning journey. These resources may include comprehensive course materials, interactive lectures, hands-on labs, and real-world projects. Additionally, participants will receive guidance and mentorship from experienced trainers who are experts in the fields of big data analytics and Hadoop. Furthermore, regular assessments, feedback sessions, and dedicated support channels will be available to ensure participants receive the assistance they need to succeed in the programme.

1. Enhanced Employability: Acquiring Hadoop skills boosts your appeal to employers seeking Big Data expertise.

2. Lucrative Opportunities: Opens doors to high-paying roles like big data engineer and Hadoop developer.

3. Practical Experience: Gain hands-on experience tackling real-world data challenges.

4. Professional Growth: Mastering Hadoop concepts fast-tracks career advancement.

5. Industry Relevance: Stay abreast of the latest trends, ensuring long-term career viability in tech.

Our Job Assistance Programme offers you special guidance through the course curriculum and helps in your interview preparation.

Hadoop is a widely used big data framework designed to scale from a single server to clusters of thousands of commodity machines. It is one of the best career paths in the software development sector, with an average annual salary of 10 LPA.

Infibee’s placement guidance helps you reach your desired role in top organisations, ensuring you stand out and excel in every opportunity.

You need not worry about missing a class. Our dedicated course coordinator will help you with anything related to administration and will arrange a session with the trainer to cover the class you missed.

Yes, of course. You can contact our team at Infibee Technologies, and we will schedule a free demo or a conference call with our mentor for you.

We provide classroom training, online training, and self-paced study material with recorded sessions, based on each student's preferences.

Yes, all our trainers are industry professionals with extensive experience in their respective domains. They bring hands-on practical and real-world knowledge to the training sessions.

Yes, participants typically receive access to course materials, including recorded sessions, assignments, and additional resources, even after the training concludes.

We provide placement assistance to students, including resume building, interview preparation, and job placement support for a wide range of software courses.

Yes, we offer customisation of the syllabus for both individual candidates and corporate clients.

Yes, we offer corporate training solutions. Companies can contact us for customised programmes tailored to their team’s needs.

Participants need a stable internet connection and a device (computer, laptop, or tablet) with the necessary software installed. Detailed technical requirements are provided upon enrollment.

In most cases, such requests can be accommodated. Participants can reach out to our support team to discuss their preferences and explore available options.
